Sustainability and AI are two of the most powerful trends in the tech industry. They seem like fire and water: incompatible, yet impossible to ignore. How can a company pursue green goals while using Large Language Models with their unbridled appetite for energy? The way forward may lie in the growing popularity of Small Language Models, which feature streamlined architectures and a much lower demand for computing power.

We offer tips on making websites and web apps more efficient and reducing their carbon footprint, for example by shrinking file sizes, removing auto-playing videos, or introducing dark mode, and we share Umbraco's Sustainability Practices webinars. A critical reader may see some inconsistency, if not hypocrisy, in the fact that we also recommend AI integrations.

This criticism is not unjustified. Even a quick read of an article such as one from The Economist reveals that Large Language Models (such as ChatGPT, LLaMA, or Google's Gemini, often treated as synonymous with AI) consume significantly more electricity than other data center operations.

Here are just a few figures from that article.

- A pre-AI hyperscale server rack uses 10-15 kilowatts (kW) of power, while an AI server rack uses 40-60 kW. What will overall consumption look like as this technology spreads?
- A single ChatGPT prompt can consume up to ten times as much electricity as a simple Google search.
- According to forecasts by the International Energy Agency, data centers will consume twice as much electricity by 2026 as they do now, driven by the growing popularity of large language models and generative AI in general. That is equivalent to the total amount of electricity Japan uses today.
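To put the rack figures in perspective, here is a quick back-of-the-envelope calculation in Python. It assumes, purely for illustration, that each rack draws its rated power continuously for a whole year; real racks rarely run flat out, so treat the results as upper bounds rather than measurements.

```python
# Rack power ranges quoted above, in kilowatts (kW).
PRE_AI_RACK_KW = (10, 15)
AI_RACK_KW = (40, 60)

# Assumption: the rack draws its rated power around the clock, all year.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def annual_energy_mwh(power_kw: float) -> float:
    """Energy in megawatt-hours for one year of continuous operation."""
    return power_kw * HOURS_PER_YEAR / 1000


for label, (low, high) in (("pre-AI rack", PRE_AI_RACK_KW), ("AI rack", AI_RACK_KW)):
    print(f"{label}: {annual_energy_mwh(low):.1f}-{annual_energy_mwh(high):.1f} MWh per year")

# The ratio is the same at both ends of the range: 40/10 = 60/15 = 4.
print(f"ratio: {AI_RACK_KW[0] / PRE_AI_RACK_KW[0]:.0f}x")
```

Running it prints roughly 88 to 131 MWh a year for a pre-AI rack against 350 to 526 MWh for an AI rack, the same fourfold gap quoted above, multiplied across every rack in a data center.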

WHY DOES AI NEED SO MUCH ENERGY TO WORK?

The energy consumption of Large Language Models comes from the computing power they require: first to train them on vast datasets, and then to run every query through billions of learned parameters as they predict, word by word, the most probable response.
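The idea of picking "the most probable" continuation can be illustrated with a deliberately tiny toy: a bigram word counter in Python that always appends the word most often seen after the previous one. This is not how an LLM is built (real models use neural networks with billions of learned parameters rather than a lookup table), and the corpus is made up for illustration. What it does show is the loop that drives generation: one prediction per word, repeated until the answer is complete. In a real LLM each of those steps is a pass through the whole network, which is a large part of where the energy goes.

```python
from collections import Counter, defaultdict

# A tiny, made-up training text. Real models learn from vastly more text.
corpus = "the model predicts the next word and the next word after that".split()

# Count how often each word follows each other word (a bigram table).
follows: dict[str, Counter] = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follows[current][nxt] += 1


def generate(start: str, length: int) -> list[str]:
    """Greedily append the most frequent follower of the last word."""
    words = [start]
    for _ in range(length):
        candidates = follows.get(words[-1])
        if not candidates:  # no known continuation: stop early
            break
        # One prediction step. In an LLM, this step runs through every parameter.
        # Ties go to the follower seen first in the corpus.
        words.append(candidates.most_common(1)[0][0])
    return words


print(" ".join(generate("the", 4)))
```

Running it prints `the next word and the`: each word is chosen only because it most often followed the previous one in the training text.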

Imagine how much energy your brain burns when you sit an exam after spending all night studying the material from every year of school. Now imagine having to keep that brain in the same state of intellectual readiness all the time.

Compare this with the mental effort of the everyday work tasks we are accustomed to. The contrast illustrates the difference between Large Language Models, such as the popular ChatGPT, and Small Language Models.

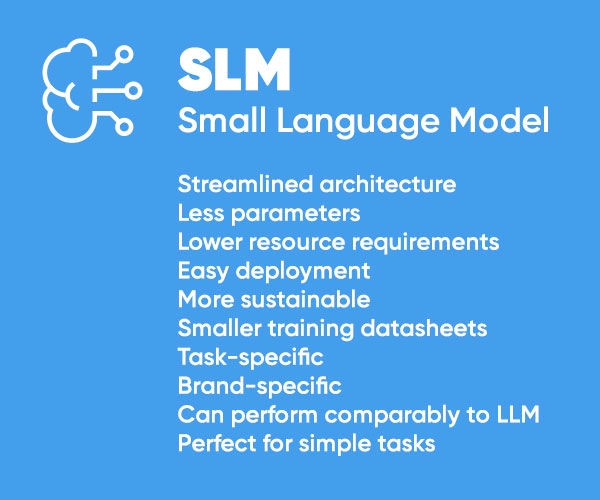

Thanks to their streamlined architecture and much smaller data processing requirements, Small Language Models can be a much cleaner AI solution for many industries and, for many tasks, an equally effective one.

LLM VS SLM - THE KEY DIFFERENCES

We can use another colorful metaphor and compare LLMs (Large Language Models) and SLMs (Small Language Models) to an elephant and an ant. The elephant remembers everything it has ever learned and has much greater room for maneuver, but also incomparably greater needs. The ant knows just enough to do its job.

The basic difference between these models lies in their parameters, the learned values that determine how a model processes its input. Large Language Models may have trillions of parameters, while Small Language Models range from a few million to a few billion. SLMs are trained on much smaller datasets and require less powerful hardware.
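The gap in parameter counts translates directly into hardware needs. The Python sketch below applies two common rules of thumb for dense transformer models: roughly 2 bytes per parameter to store weights at 16-bit precision, and roughly 2 floating-point operations per parameter for each generated token. Both are approximations assumed for illustration; real figures depend on the architecture, numeric precision and serving software. The 1-trillion and 10-million parameter models are hypothetical reference points; Microsoft's Phi-2 has about 2.7 billion parameters.

```python
# Rough sizing rules for a dense transformer (approximations, not measurements):
#   - weights stored at 16-bit precision take 2 bytes per parameter;
#   - generating one token costs about 2 floating-point operations per parameter.
BYTES_PER_PARAM_FP16 = 2
FLOPS_PER_PARAM_PER_TOKEN = 2


def weights_gb(params: float) -> float:
    """Approximate memory needed just to hold the weights, in gigabytes."""
    return params * BYTES_PER_PARAM_FP16 / 1e9


def flops_per_token(params: float) -> float:
    """Approximate compute needed to generate one token."""
    return params * FLOPS_PER_PARAM_PER_TOKEN


# Illustrative sizes; the first and last entries are hypothetical.
models = {
    "1T-parameter LLM (hypothetical)": 1e12,
    "Phi-2 (~2.7B)": 2.7e9,
    "10M-parameter SLM (hypothetical)": 1e7,
}

for name, params in models.items():
    print(f"{name}: ~{weights_gb(params):,.2f} GB of weights, "
          f"~{flops_per_token(params):.1e} FLOPs per token")
```

On these assumptions, the trillion-parameter model needs around 2,000 GB just for its weights against roughly 5.4 GB for Phi-2, and every token it generates costs several hundred times more compute.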

Despite this, if well-trained and fine-tuned, SLMs can perform comparably to LLMs on narrower tasks.

AI is widely used in business, but often for tasks in narrowly defined fields. To create descriptive financial reports, for example, do you need a top student with all the knowledge in the world, or just someone well trained in writing reports who has basic accounting knowledge?

Using a Large Language Model for simple tasks can be like using a missile to swat a fly. Meanwhile, after proper training, Small Language Models such as Microsoft's Phi-2 or Google's Gemma can perform just as well at sorting data or advising visitors to your website on the best vacation destination.

AI INTEGRATIONS IN UMBRACO'S FUTURE

During his presentation on Umbraco's future plans at the last Codegarden, Product Owner Lasse Fredslund discussed AI integration and the planned direction of development, with a focus on integrating systems with Small Language Models. These task-specific tools are fully capable of supporting both website visitors in dialogue and CMS users in their work, provided they are given appropriate knowledge resources and fine-tuning.

In several other sessions, speakers confidently predicted that agencies will soon invest heavily in task-specific and brand-specific AI models. Although the industry currently seems dominated by a few large players synonymous with AI, the expected spread of the technology will likely lead to more compact solutions tailored to very narrow needs, which will also be much more sustainable.

Looking for Certified Umbraco Specialists to develop custom integrations for you? Who you gonna call?
